Lightspeed Alert™ scans vast amounts of information and flags potentially concerning text or images, creating alerts that district and school staff then follow up on in the real world. However, not all alerts are the same. An alert that contains potential child pornography, for example, is quite different from an alert that flags two students using flirtatious language.

Lightspeed Alert recently added powerful new machine learning capabilities that classify each alert as High, Medium, or Low Concern. Districts that use Lightspeed Alert can take advantage of these capabilities by selecting which levels of alerts they would like to see in each of the categories Lightspeed Alert covers: Self-Harm, Violence, Bullying, and Explicit.

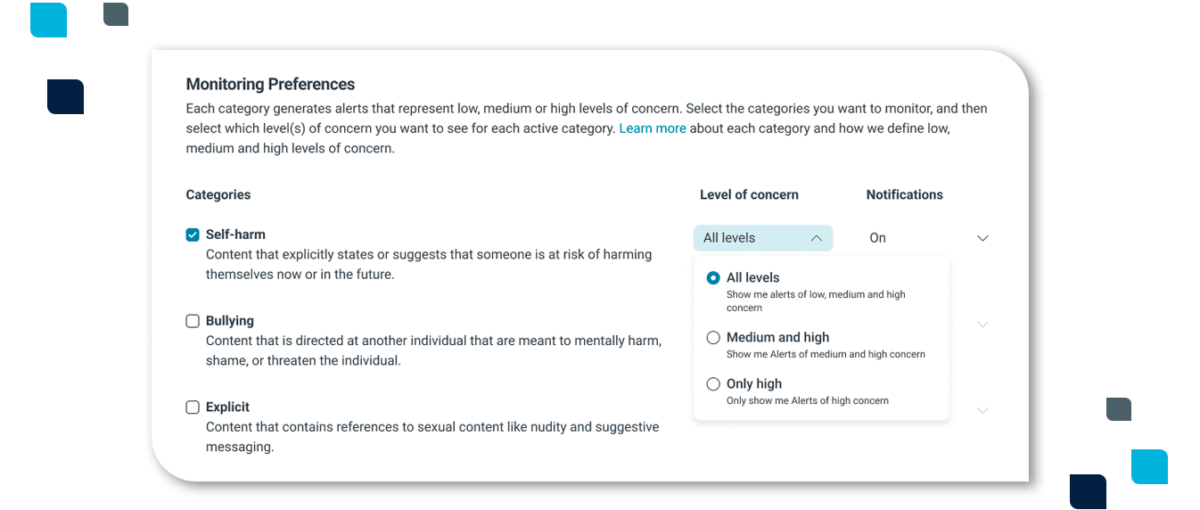

In the Explicit category, a district may wish to see only High Concern alerts, which include topics like child pornography, while turning off Low Concern alerts, which include actions like flirting or talking about hugging or kissing. However, the same district may want to see High, Medium, and Low Concern alerts in the Violence category to cover actions ranging from realistic threats of violence (High Concern) down to kicking or punching (Low Concern).

To make those adjustments, Lightspeed Alert administrators simply go to the Settings page and choose which levels of alerts should be created for each category. By default, alerts at all levels are created. However, a district that wants to save time reviewing and closing out alerts of lower concern in certain categories can now turn those levels off.
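For readers who think in code, the per-category settings described above can be pictured as a simple lookup. The sketch below is purely illustrative: the names `Concern`, `default_settings`, and `should_create_alert` are hypothetical and are not part of Lightspeed Alert, which is configured through its Settings page rather than through code.

```python
from enum import Enum


class Concern(Enum):
    """The three concern levels named in the article."""
    LOW = "Low"
    MEDIUM = "Medium"
    HIGH = "High"


# The four categories the article says Lightspeed Alert covers.
CATEGORIES = ("Self-Harm", "Violence", "Bullying", "Explicit")


def default_settings():
    """Hypothetical default: every level is enabled in every category."""
    return {category: set(Concern) for category in CATEGORIES}


def should_create_alert(settings, category, level):
    """Return True if the district has enabled this level for this category."""
    return level in settings[category]


# Example: a district keeps the defaults everywhere except Explicit,
# where it chooses to see only High Concern alerts.
settings = default_settings()
settings["Explicit"] = {Concern.HIGH}
```

With these settings, a Low Concern alert in the Violence category would still be created, while a Low Concern alert in the Explicit category would not.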

These controls can be adjusted as often as needed. If a district had previously set the Bullying category to create only High Concern alerts but then noticed an uptick in bullying, it could keep a closer eye on potential bullying issues by adjusting its settings to create Bullying alerts at all levels of concern. No matter what a district’s preferences are, it can now take control and see only the alerts that match its priorities.